SIDBench - A Python Framework for Reliably Assessing Synthetic Image Detection Methods

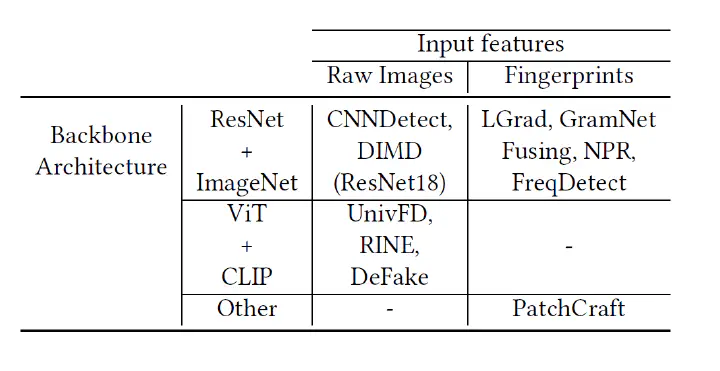

Categorizing the models integrated in SIDBench, based on the type of input and the backbone architecture.


The problem

Generative AI is making it easier to create highly realistic synthetic images. Detecting these images is challenging, and several methods have been developed to tackle the problem. However, there is often a large gap between how well these methods perform in experiments and how they perform in real-world settings.

To help bridge this gap, our recent work “SIDBench: A Python Framework for Reliably Assessing Synthetic Image Detection Methods” introduces a benchmarking framework that integrates several state-of-the-art detection models. These models were chosen for their diverse features and network designs, so that together they cover a wide range of techniques. The framework uses recent, highly realistic datasets and studies how common online image transformations, such as JPEG compression, affect detection accuracy. The tool is available online and is designed to be easily extended with new datasets and detection models, making it a flexible resource for improving synthetic image detection.

The SIDBench Framework

To map the current landscape of Synthetic Image Detection (SID) methods, we reviewed recent literature and identified 11 state-of-the-art models published since 2020. We categorized these models along two dimensions: the type of input they use (raw images, or extracted features such as frequency patterns and noise) and their backbone architecture. Popular backbones include ResNet50 pre-trained on ImageNet and ViT-L/14 pre-trained with CLIP.

Main Findings from SIDBench

Performance Across Different Models

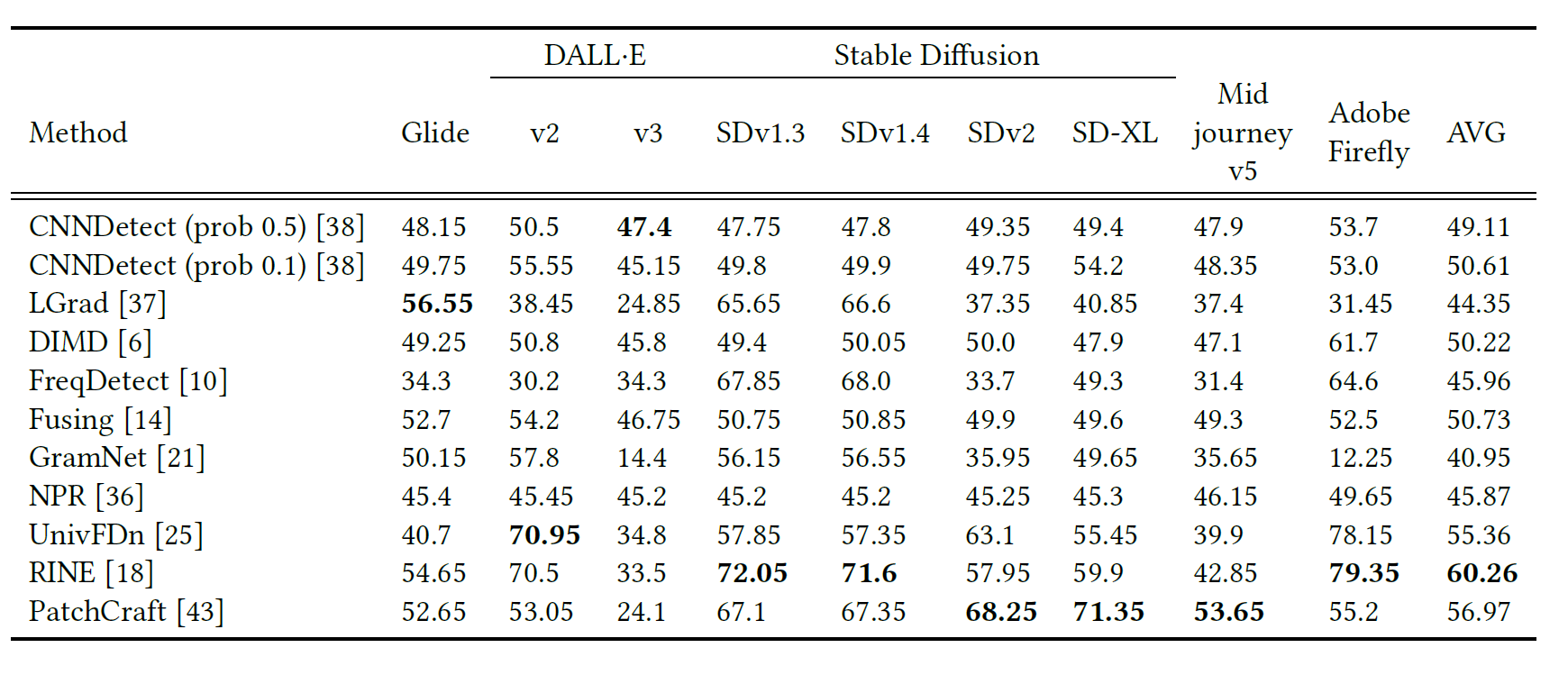

Our benchmark confirmed that almost all models trained on GAN-generated images generalize well across different GANs. However, their performance drops significantly on images from Diffusion Models (DMs), such as Guided Diffusion, Latent Diffusion, and Glide. Notably, some models, such as RINE and PatchCraft, perform surprisingly well on DMs.

Calibration for Improved Accuracy

We found that accuracy can be improved further by calibrating the decision threshold used to classify an image as real or synthetic, instead of relying on the default one. This suggests that the detectors' default operating points leave room for improvement.
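As a rough illustration of what threshold calibration means, here is a minimal sketch, assuming each detector outputs a score in [0, 1] where higher means "more likely synthetic" and where 0.5 is the default cut-off. The helpers `accuracy_at` and `calibrate_threshold` are hypothetical and not part of SIDBench; they simply try every observed score as a threshold and keep the one with the highest accuracy.

```python
from typing import Sequence, Tuple


def accuracy_at(threshold: float, scores: Sequence[float], labels: Sequence[int]) -> float:
    """Accuracy when images with score >= threshold are labeled synthetic (1)."""
    preds = [1 if s >= threshold else 0 for s in scores]
    return sum(p == y for p, y in zip(preds, labels)) / len(labels)


def calibrate_threshold(
    scores: Sequence[float], labels: Sequence[int], default: float = 0.5
) -> Tuple[float, float]:
    """Try every observed score as a cut-off; return the best (threshold, accuracy).

    Starts from the default threshold, so the result is never worse than it
    on the data passed in. Ties keep the earlier candidate.
    """
    best_t, best_acc = default, accuracy_at(default, scores, labels)
    for t in sorted(set(scores)):
        acc = accuracy_at(t, scores, labels)
        if acc > best_acc:
            best_t, best_acc = t, acc
    return best_t, best_acc


# Toy scores (made up for illustration): the detector is under-confident on a
# new generator, so every fake image scores below the default 0.5 cut-off.
scores = [0.10, 0.20, 0.30, 0.35, 0.40, 0.45]
labels = [0, 0, 0, 1, 1, 1]  # 1 = synthetic, 0 = real

print(accuracy_at(0.5, scores, labels))     # 0.5
print(calibrate_threshold(scores, labels))  # (0.35, 1.0)
```

Picking the threshold on the same images you report results on gives an optimistic estimate; in practice the threshold would be chosen on a held-out calibration split and then fixed.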

Real-World Performance Evaluation

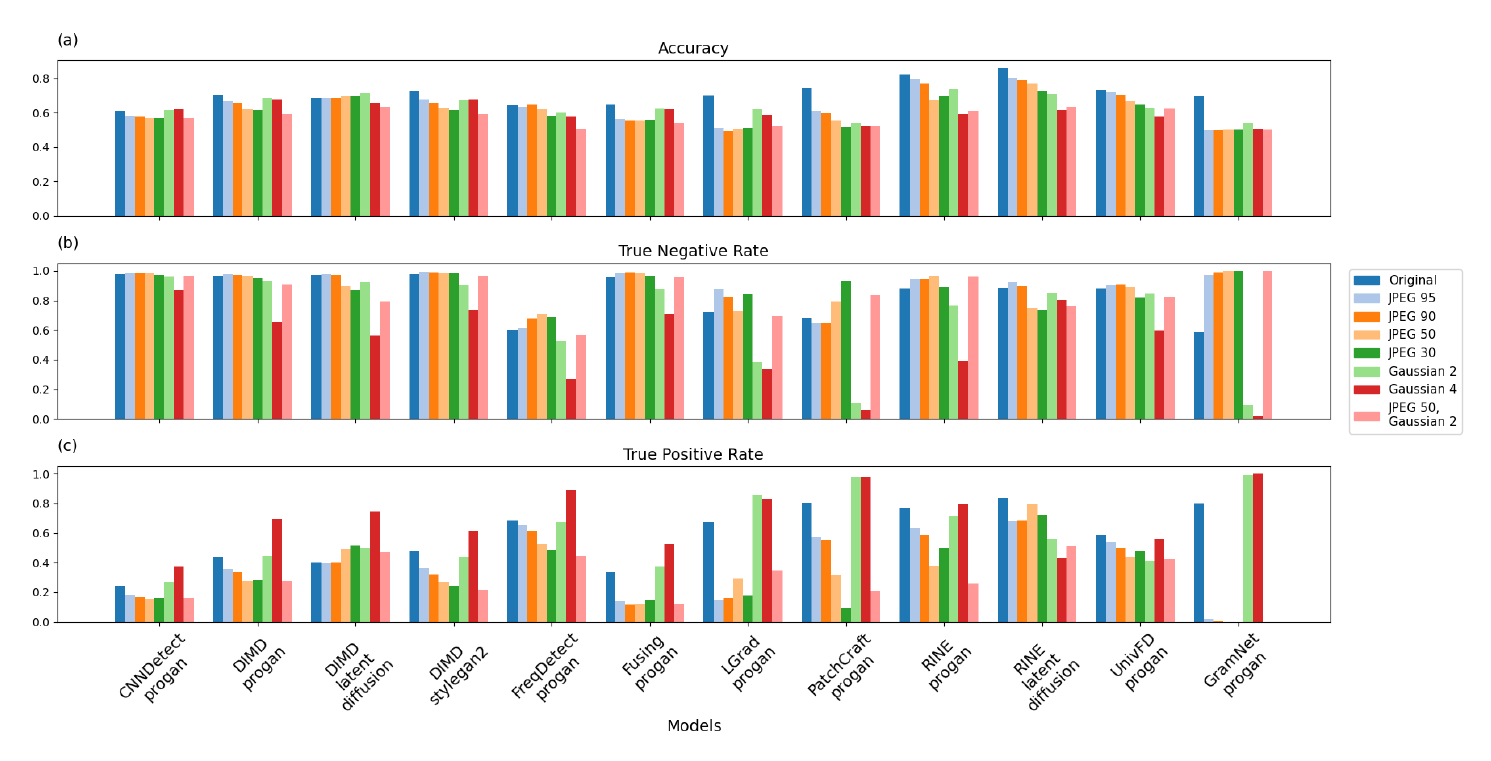

To assess real-world applicability, we evaluated the ProGAN-trained models on the Synthbuster dataset, which contains high-resolution, high-quality images. Performance dropped significantly across all models, highlighting how difficult it is to maintain accuracy outside controlled experimental conditions. The table above presents the overall accuracy of all ProGAN-trained detectors.

Impact of Training Datasets

To explore the impact of training data, we also included models trained on sources other than ProGAN. For instance, RINE trained on Latent Diffusion detected DM images better while retaining good performance on GAN-generated images. Additionally, models trained on DM images gave better results on the harder subsets of Synthbuster, such as images generated with Midjourney v5.

Handling Image Preprocessing (Resizing and Cropping)

When using pre-trained models, images must be brought to the expected input size, either by cropping to a predefined size or by resizing. Each option has trade-offs: cropping discards information outside the cropped area, while resizing can introduce distortions that affect performance. In particular, resizing alters an image's frequency components, which matters for models such as FreqDetect that rely on frequency artifacts. Our findings indicate that, on average, performance remains relatively consistent regardless of the preprocessing method. However, the standard deviation across the Synthbuster subsets suggests that resizing versus cropping affects each model differently.
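To illustrate why resizing can hurt frequency-based detectors, here is a minimal pure-Python sketch; it is not SIDBench's preprocessing code. It assumes a grayscale image stored as a list of rows and uses a simple box-filter (block-averaging) downsample as a stand-in for resizing, whereas real pipelines typically use bilinear or bicubic interpolation from an image library. The helpers `center_crop`, `box_downsample`, `checkerboard` and `pixel_range` are hypothetical. A one-pixel checkerboard plays the role of a high-frequency generation artifact.

```python
from typing import List

Image = List[List[float]]  # grayscale image as a list of pixel rows


def center_crop(img: Image, size: int) -> Image:
    """Keep the central size x size window; pixels outside it are discarded."""
    h, w = len(img), len(img[0])
    if size > h or size > w:
        raise ValueError("crop size exceeds image dimensions")
    top, left = (h - size) // 2, (w - size) // 2
    return [row[left:left + size] for row in img[top:top + size]]


def box_downsample(img: Image, factor: int) -> Image:
    """Resize by averaging non-overlapping factor x factor blocks (a box filter)."""
    h, w = len(img) // factor, len(img[0]) // factor
    out = []
    for i in range(h):
        row = []
        for j in range(w):
            block = [img[i * factor + di][j * factor + dj]
                     for di in range(factor) for dj in range(factor)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


def checkerboard(n: int) -> Image:
    """Highest-frequency pattern: neighbouring pixels alternate between 0 and 1."""
    return [[float((i + j) % 2) for j in range(n)] for i in range(n)]


def pixel_range(img: Image) -> float:
    """Max minus min pixel value; 0.0 means the pattern has been flattened."""
    flat = [v for row in img for v in row]
    return max(flat) - min(flat)


img = checkerboard(8)
cropped = center_crop(img, 4)     # 4x4 window, alternating pattern intact
resized = box_downsample(img, 2)  # 4x4 image, every block averages to 0.5
print(pixel_range(cropped), pixel_range(resized))  # 1.0 0.0
```

Both outputs have the same size, but only the cropped one still carries the artifact: cropping preserves the original pixel grid at the cost of discarding everything outside the window, while resizing keeps the whole scene but resamples the grid and can smooth away exactly the high-frequency traces a detector like FreqDetect looks for.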

Influence of Image Transformations

Image transformations such as Gaussian blurring and JPEG re-compression degrade the performance of SID models. In our tests, both generally reduced accuracy (ACC), with varying effects on the true positive rate (TPR, the share of synthetic images detected as synthetic) and the true negative rate (TNR, the share of real images correctly classified as real). Gaussian blurring tends to increase TPR at the expense of TNR: models flag more images as fake, including some real ones. When blurring is combined with JPEG re-compression, however, this behavior changes, which calls for further investigation. JPEG compression usually affects TNR less than TPR, and for some models the drop in TPR is so severe that they fail to detect fake images altogether.
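The relationship between ACC, TPR and TNR can be made concrete with a small sketch, assuming binary predictions where 1 means synthetic (the positive class) and 0 means real. The `evaluate` helper and the toy predictions below are hypothetical: they only mimic the qualitative pattern described above (blurring raising TPR while lowering TNR and ACC) and are not SIDBench results.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Metrics:
    acc: float  # share of all images classified correctly
    tpr: float  # share of synthetic images flagged as synthetic
    tnr: float  # share of real images classified as real


def evaluate(preds: Sequence[int], labels: Sequence[int]) -> Metrics:
    """Compute ACC, TPR and TNR; 1 = synthetic (positive class), 0 = real."""
    if len(preds) != len(labels) or not labels:
        raise ValueError("preds and labels must be non-empty and of equal length")
    tp = sum(1 for p, y in zip(preds, labels) if p == 1 and y == 1)
    tn = sum(1 for p, y in zip(preds, labels) if p == 0 and y == 0)
    n_fake = sum(1 for y in labels if y == 1)
    n_real = len(labels) - n_fake
    return Metrics(
        acc=(tp + tn) / len(labels),
        tpr=tp / n_fake if n_fake else 0.0,
        tnr=tn / n_real if n_real else 0.0,
    )


labels = [1, 1, 1, 1, 0, 0, 0, 0]   # four synthetic, four real images
clean = [1, 1, 0, 0, 0, 0, 0, 1]    # hypothetical predictions on original images
blurred = [1, 1, 1, 0, 1, 1, 0, 1]  # hypothetical predictions after blurring

print(evaluate(clean, labels))    # Metrics(acc=0.625, tpr=0.5, tnr=0.75)
print(evaluate(blurred, labels))  # Metrics(acc=0.5, tpr=0.75, tnr=0.25)
```

Reporting TPR and TNR separately is what makes this shift visible: a model that simply labels more images as fake can gain TPR while ACC still falls.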

The content of this post is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License (CC BY-NC-SA 4.0).